Everything You Need to Know About AI Neural Networks

Key Takeaways

- Brain-Inspired Design: Neural networks are loosely inspired by the structure of the human brain

- Layered Architecture: Networks consist of input, hidden, and output layers that process information

- Learning Process: Networks improve through training on data, adjusting weights and connections

- Specialized Types: Different network architectures (CNN, RNN, etc.) excel at specific tasks

- Widespread Applications: Neural networks power advances in computer vision, language processing, and more

Understanding Neural Networks

Artificial Neural Networks (ANNs) lie at the heart of deep learning. Loosely inspired by the biological neural networks of the human brain, ANNs learn patterns from examples rather than following hand-written rules, which lets computers tackle problems across many fields of AI, from recognizing images to processing language.

The Building Blocks

Neural networks are composed of interconnected nodes (artificial neurons) organized in layers:

Nodes and Edges

Similar to biological neurons, artificial nodes receive, process, and transmit information. The connections between nodes (edges) have associated weights that determine the strength of the signal.

Layer Structure

- Input Layer: Receives initial data (images, text, etc.)

- Hidden Layers: Process information through multiple transformations

- Output Layer: Produces the final result (classification, prediction, etc.)
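To make the layer structure concrete, here is a minimal sketch that counts the learnable parameters of a network. It assumes every node in one layer connects to every node in the next (a fully connected design) and that each node outside the input layer has one bias; the function name and layer sizes are hypothetical.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Count weights and biases in a fully connected network (hypothetical helper)."""
    total = 0
    # Walk over each pair of adjacent layers: input->hidden, hidden->hidden, ..., hidden->output
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per edge between the two layers
        total += n_out         # one bias per node in the receiving layer
    return total


# Hypothetical sizes: 4 input nodes, one hidden layer of 8 nodes, 3 output nodes.
print(count_parameters([4, 8, 3]))  # 67
```

Even this small network has 67 adjustable values (4 x 8 + 8 weights and biases into the hidden layer, 8 x 3 + 3 into the output layer), which hints at why larger networks need substantial data and compute.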

Activation and Processing

Each node processes inputs by:

- Calculating a weighted sum of inputs

- Adding a bias term

- Applying an activation function (like ReLU, sigmoid, or tanh)

- Passing the activated output to the next layer (only step-activation units, such as the classic perceptron, apply a hard threshold)
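The steps above can be sketched for a single node in plain Python. The input values, weights, and bias are hypothetical, chosen so the weighted sum plus bias comes to about -0.2. There is no separate threshold check: the activation function alone shapes the output, so ReLU returns zero for a negative sum while sigmoid and tanh still pass on a graded value.

```python
import math
from typing import Callable


def relu(z: float) -> float:
    # Rectified linear unit: passes positive values, zeroes out negatives
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float,
           activation: Callable[[float], float]) -> float:
    # 1. Weighted sum: each weight scales the signal on one incoming edge
    z = sum(x * w for x, w in zip(inputs, weights))
    # 2. Add the bias term, which shifts the weighted sum
    z += bias
    # 3. Apply the activation function; the result goes to the next layer as-is
    return activation(z)


# Hypothetical inputs, weights, and bias (weighted sum + bias is about -0.2)
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.1]
b = 0.1

print(neuron(x, w, b, relu))                 # 0.0
print(round(neuron(x, w, b, sigmoid), 4))    # 0.4502
print(round(neuron(x, w, b, math.tanh), 4))  # -0.1974
```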

The Learning Process

Neural networks aren't explicitly programmed for specific tasks—they learn from data:

Training Methodology

- Forward Propagation: Data flows through the network to generate an output

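- Error Calculation: A loss function measures the difference between the predicted and actual output

- Backpropagation: The error is propagated backward through the network to compute how much each weight contributed to it (the gradient of the loss with respect to that weight)

- Optimization: An optimizer such as gradient descent uses those gradients to update the weights in the direction that reduces the error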
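The four training steps can be illustrated with the smallest possible network: one sigmoid neuron learning logical OR. This is a minimal sketch, not a general training framework. Because there is only one layer, backpropagation reduces to a single application of the chain rule. The choice of binary cross-entropy as the loss, the learning rate, and the epoch count are all assumptions made for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, learning_rate=0.5, epochs=2000):
    """Train one sigmoid neuron with plain (full-batch) gradient descent.

    data: list of (inputs, target) pairs with targets 0 or 1.
    """
    n_inputs = len(data[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for inputs, target in data:
            # Forward propagation: compute the prediction
            z = sum(x * w for x, w in zip(inputs, weights)) + bias
            prediction = sigmoid(z)
            # Error calculation + backpropagation: with sigmoid output and
            # binary cross-entropy loss, dLoss/dz simplifies to (prediction - target)
            dz = prediction - target
            for i, x in enumerate(inputs):
                grad_w[i] += dz * x  # chain rule: dLoss/dw_i = dLoss/dz * x_i
            grad_b += dz             # dLoss/db = dLoss/dz
        # Optimization: step each parameter against its average gradient
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
    return weights, bias


# Logical OR: a linearly separable toy problem a single neuron can learn.
or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_neuron(or_data)
for inputs, target in or_data:
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    print(inputs, "target:", target, "predicted:", round(sigmoid(z)))
```

After training, each rounded prediction should match its target. Real networks repeat the same loop over many layers, with backpropagation chaining gradients from the output back through every hidden layer.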

Data Requirements

The performance of neural networks generally improves with more training data. Larger, more diverse datasets tend to produce better generalization and accuracy, provided the data is of good quality.

Types of Neural Networks

Different neural network architectures are designed for specific types of problems:

Feed-Forward Neural Networks (FFNNs)

The most fundamental type of neural network, in which information flows in one direction only, from input to output, with no feedback loops.

Key Characteristics:

- Unidirectional information flow

- No memory of previous inputs

- Foundation for many other network types

Applications:

- Classification problems

- Regression analysis

- Simple pattern recognition
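A feed-forward pass can be sketched as a chain of fully connected layers, each feeding its outputs straight into the next. The parameters below are hand-picked and hypothetical (a trained network would learn them), and the helper names are illustrative. Because nothing is stored between calls, the same input always yields the same output, which is the "no memory" property listed above.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weights[j] holds the incoming weights of node j."""
    return [
        activation(sum(x * w for x, w in zip(inputs, node_weights)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Pass data strictly forward through each (weights, biases, activation) layer."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden ReLU nodes -> 1 linear output node.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[2.0, 1.0]], [0.5], identity),
]
print(feed_forward([3.0, 1.0], layers))  # [6.5]
```

A linear (identity) output suits regression; for classification, the output layer would typically use a sigmoid or softmax instead.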

Recurrent Neural Networks (RNNs)

Networks with feedback connections that maintain a memory of previous inputs, making them well suited to sequential data.

Key Characteristics:

- Feedback loops create "memory"

- Can process sequences of variable length

- Maintains internal state

Applications:

- Natural language processing

- Speech recognition

- Time series prediction

- Machine translation
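The feedback loop behind an RNN's "memory" can be sketched with a simple (Elman-style) recurrent cell. For brevity, this sketch assumes a scalar input and a scalar hidden state, whereas real RNNs use vectors and weight matrices. The weight values are hypothetical. The new state depends on both the current input and the previous state, so the same cell handles sequences of any length.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    # New hidden state mixes the current input with the previous state (the feedback loop)
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence of any length, carrying the internal state forward."""
    h = 0.0  # initial internal state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# One input pulse followed by zeros: the state stays non-zero after the input
# stops, because each step carries information forward from the previous one.
print(run_rnn([1.0, 0.0, 0.0]))
# The same inputs in a different order produce a different final state.
print(run_rnn([0.0, 0.0, 1.0]))
```

In practice, simple cells like this struggle to retain information over long sequences, which is why gated variants are commonly used for longer inputs.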

Convolutional Neural Networks (CNNs)

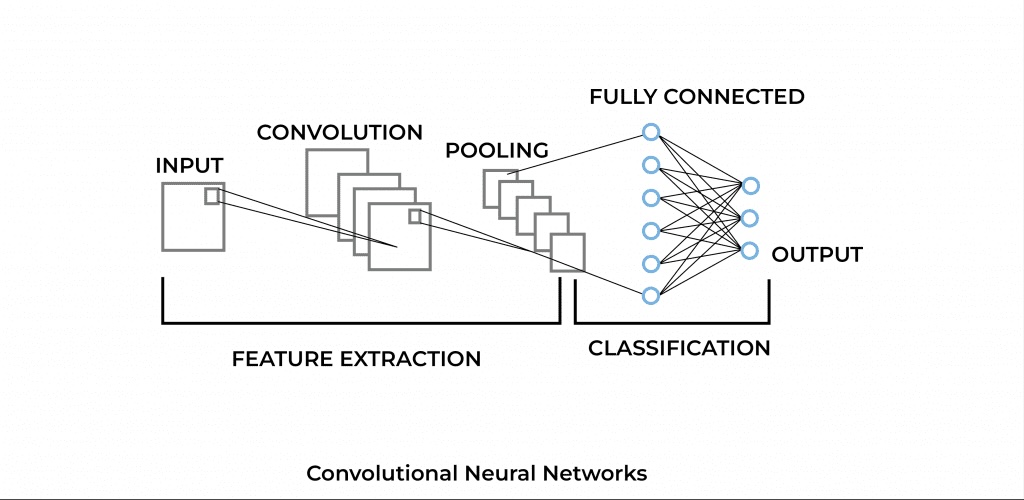

Structure of a CNN showing convolutional, pooling, and fully connected layers

Specialized networks designed primarily for image processing and visual data analysis.

Key Characteristics:

- Uses convolutional layers to detect spatial patterns

- Employs pooling layers to reduce dimensionality

- Preserves spatial relationships in data

Applications:

- Image classification

- Object detection

- Facial recognition

- Document analysis

- Video processing
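The two core CNN operations can be sketched on a tiny grayscale image stored as nested lists. The convolution here is the "valid" cross-correlation that most deep learning libraries implement under the name convolution. The image and the vertical-edge kernel are hand-written, hypothetical examples, whereas a trained CNN learns its kernels from data. Pooling then keeps the strongest response in each 2x2 window, halving each spatial dimension while preserving where the edge was found.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation): slide the kernel over the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 5x5 image: dark columns on the left, bright columns on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written vertical-edge detector: responds where brightness rises left to right.
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)       # 2x2 map after pooling
print(features)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)    # [[2, 0], [2, 0]]
```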

...(about 20 lines omitted)...

Applications Across Industries

Neural networks have transformed numerous fields:

Computer Vision

- Object Detection: Identifying and localizing objects within images

- Facial Recognition: Verifying identities through facial features

- Medical Imaging: Detecting anomalies in X-rays, MRIs, and CT scans

- Autonomous Vehicles: Perceiving and interpreting the surrounding environment

Natural Language Processing

- Machine Translation: Converting text between languages

- Sentiment Analysis: Determining emotional tone in text

- Text Generation: Creating human-like written content

- Question Answering: Providing relevant responses to natural language queries

Financial Services

- Fraud Detection: Identifying suspicious transaction patterns

- Algorithmic Trading: Making automated investment decisions

- Risk Assessment: Evaluating creditworthiness and insurance risks

- Market Forecasting: Predicting financial market trends

Healthcare

- Disease Diagnosis: Identifying patterns indicative of medical conditions

- Drug Discovery: Accelerating pharmaceutical research

- Patient Monitoring: Analyzing vital signs and predicting complications

- Personalized Medicine: Tailoring treatments to individual genetic profiles

Challenges and Limitations

Despite their power, neural networks face several challenges:

Data Requirements

Networks typically need large amounts of high-quality, labeled data for effective training.

Computational Intensity

Training complex networks requires significant computational resources and time.

Interpretability

The "black box" nature of neural networks makes it difficult to understand how they reach specific conclusions.

Overfitting

Networks may perform well on training data but fail to generalize to new, unseen examples.
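A common way to detect and limit overfitting is to track loss on a held-out validation set and stop training once it stops improving, a technique known as early stopping. The sketch below applies that rule to a list of validation losses. The loss values and the `patience` setting are made up for illustration; in a real run they would come from evaluating the network after each epoch.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch whose weights should be kept.

    Training stops once validation loss has failed to improve for `patience`
    consecutive epochs; validation loss rising while training loss keeps
    falling is a classic sign of overfitting.
    """
    best_epoch, best_loss = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss  # new best: remember this epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # no improvement for too long: stop training
    return best_epoch


# Made-up validation losses that bottom out and then climb as the network overfits.
val_losses = [0.95, 0.70, 0.58, 0.55, 0.57, 0.61, 0.66]
print(early_stopping_epoch(val_losses))  # 3
```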

Future Directions

The field of neural networks continues to evolve rapidly:

Self-Supervised Learning

Reducing dependence on labeled data by having networks learn from unlabeled examples.

Neuromorphic Computing

Developing hardware that more closely mimics the brain's neural structure.

Multimodal Networks

Creating systems that can process and integrate multiple types of data (text, images, audio).

Ethical AI Development

Addressing bias, fairness, and transparency in neural network applications.

Conclusion

Artificial Neural Networks are among the most powerful tools in modern computing, enabling machines to perform tasks that once seemed exclusive to human intelligence. Loosely modeled on the brain and trained on data rather than explicitly programmed, these networks have transformed fields from computer vision to natural language processing.

As neural network architectures continue to evolve and computing power increases, we can expect even more sophisticated applications that further blur the line between human and artificial intelligence. Understanding the fundamentals of these networks is increasingly important for anyone looking to navigate our AI-enhanced future.

This article provides a historical perspective on neural networks. While Visionify now specializes in computer vision solutions for various industries, we recognize the continuing importance of neural network architectures in powering modern AI systems.
